In this post I am going to show how you can very quickly get a Twitter live feed streaming into your Oracle Database as a Service (DBAAS) instance. Although this example will work on any machine with an Oracle client and for any Oracle Database instance putting it on DBAAS makes it extremely easy to utilize our streaming Twitter data inside of other Oracle Cloud applications such as Business Intelligence Cloud Service (BICS).

Acknowledgments:

- Thank you to geniuses at Tweepy for making it so I don’t have to deal with oauth or really anything related to the Twitter API. I don’t know who you are but I love you! ♥

- Twitter so I never have to wonder what Kim or Kanye are thinking.

- My homey Przemyslaw Piotrowski (I don’t know him either but his examples sustain my laziness in coding) for writing a super helpful series called The Mastering Oracle+Python Series which I fully intend to finish someday.

- Who ever this dude is (https://pythonprogramming.net/twitter-api-streaming-tweets-python-tutorial/) whose example made this easy.

The great thing about DBAAS is I actually have access to the underlying server so I can take advantage of the operating system, and languages like python, as well as the database. Some might be offended by such interloping but I’m not one of them and as I said above there isn’t any requirement to do this on the database server I’m just taking advantage of a machine that has the cycles to spare.

I will assume that you already have a DBAAS instance up and running and are familiar with how to administer it and are also familiar with using tools like MobaXterm to connect to it.

At the time of this example our Database as a Service is running Oracle Linux 6.7 and 12.0.1.2 of the database with all the bells and whistles. I needed to install 5 things to make this work:

1. plliblzma to make it so I can get the latest EPEL repository without having to deal with “expletive” Error: xz compression not available message

wget https://kojipkgs.fedoraproject.org//packages/pyliblzma/0.5.3/3.el6/x86_64/pyliblzma-0.5.3-3.el6.x86_64.rpm yum install pyliblzma-0.5.3-3.el6.x86_64.rpm

2. Latest EPEL repository for this version of linux

wget http://download.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-8.noarch.rpm yum install epel-release-6-8.noarch.rpm

3. Then I can install python-pip with ease

yum install python-pip

4. And then we can fetch Tweepy with equal ease

pip install tweepy

5. And last but not least Oracle’s python library for interacting with the database

pip install cx_Oracle

Now that we have all the chunks make sure you can import the stuff you need with python and not get any errors.

[oracle@testdrive-01 ~]$ python Python 2.6.6 (r266:84292, Jul 23 2015, 05:13:40) [GCC 4.4.7 20120313 (Red Hat 4.4.7-16)] on linux2 Type "help", "copyright", "credits" or "license" for more information. >>> import tweepy >>> import cx_Oracle >>>

If you have issue with cx_Oracle you most likely just need to make sure the Oracle environment variables are set via bashrc or however you like to set them.

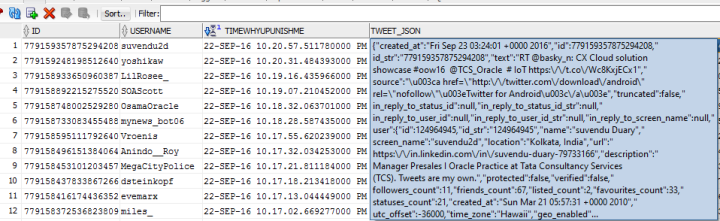

I created a table in my database to store the JSON document that is the API response

CREATE TABLE "TWITTER_USER"."EAT_MY_TWEET" ( "ID" NUMBER, "USERNAME" VARCHAR2(500 BYTE), "TIMEWHYUPUNISHME" TIMESTAMP (6) DEFAULT systimestamp, "TWEET_JSON" CLOB, CONSTRAINT "ENSURE_JSON" CHECK (TWEET_JSON is JSON) ENABLE )

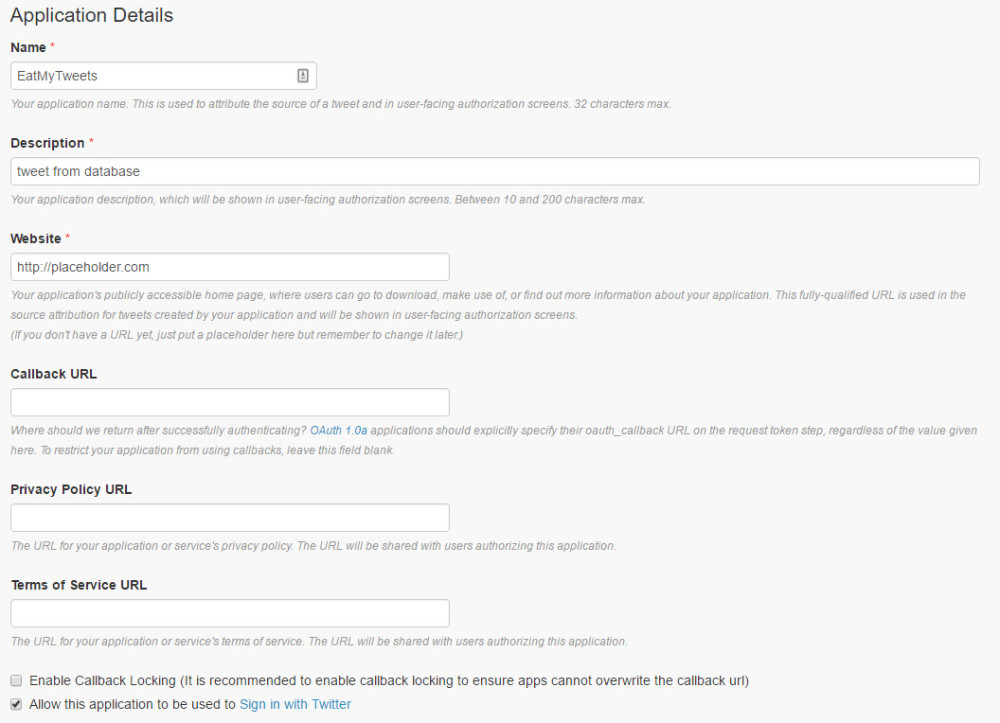

In order to use the Twitter API you will have to register your app with them here https://apps.twitter.com at which point they will give you the keys to make oauth work.

Then all we need to do is write (by which I mean copy paste and modify) a little python code and we are ready to rock:

#import libraries

from tweepy import Stream

from tweepy import OAuthHandler

from tweepy.streaming import StreamListener

import cx_Oracle

import datetime

import json

#connection string for database

conn_str='WHODAT/Ap@ssw0rd@localhost:1521/MyPluggableDatabseServiceName'

#get me a connection

conn =cx_Oracle.connect(conn_str)

#turn on autocommit

conn.autocommit=1

#object for executing sql

c=conn.cursor()

#clob variable

bvar=c.var(cx_Oracle.CLOB)

#twitter api application keys

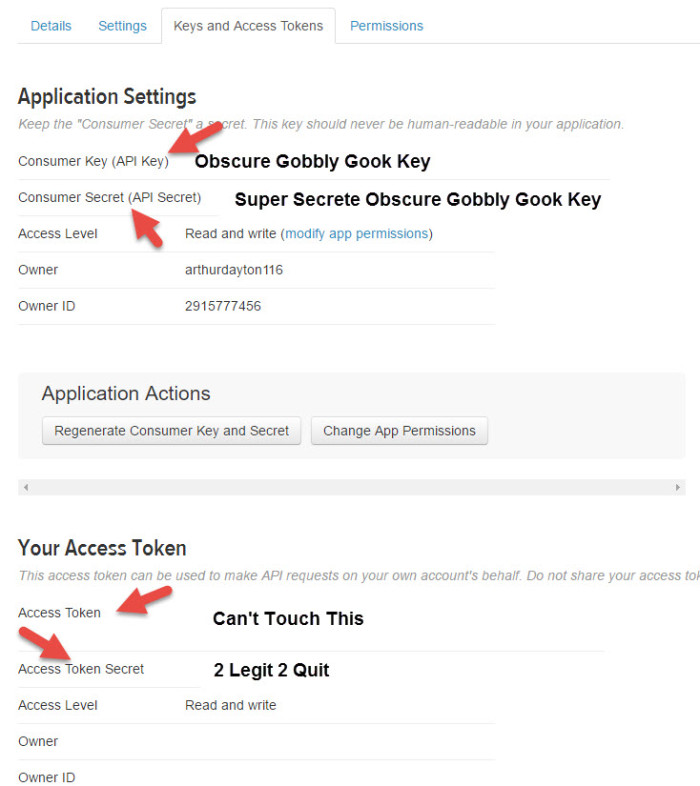

#consumer key, consumer secret, access token, access secret.

ckey='Dont'

csecret='Tell'

atoken='Anybody'

asecret='The Password'

#listen to the stream

class listener(StreamListener):

#get some

def on_data(self, data):

try:

#barf response insto json object

all_data = json.loads(data)

#parse out tweet text, screenname and tweet id

tweet = all_data["text"]

if (all_data["user"]["screen_name"]) is not None:

username = all_data["user"]["screen_name"]

else:

username = 'No User'

tid = all_data["id"]

#set clob variable to json doc

bvar.setvalue(0,data)

try:

#create sql string with bind for clob var

sql_str="INSERT INTO EAT_MY_TWEET (ID,USERNAME,TWEET_JSON) Values("+str(tid)+",q'["+username.encode('utf-8').strip()+"]',:EATIT)"

#insert into database

c.execute(sql_str,[bvar])

except Exception:

sys.exc_clear()

#watch tweets go by in console

print((username,tweet))

#in case you want to print response

#print(data)

return(True)

except Exception:

sys.exc_clear()

def on_error(self, status):

print status

print(data)

print sql_str

#log in to twitter api

auth = OAuthHandler(ckey, csecret)

auth.set_access_token(atoken, asecret)

#fire it up

twitterStream = Stream(auth, listener())

#what to search for (not case sensitive)

#comma separated for words use

# for hashtag

#phrases are words separated by spaces (like this comment)

twitterS.filter(track=["ProveFilterWorks,Oracle,Vlamis,OBIEE,BICS,DVCS,Data Visualization Desktop"])

I named my file stream.py so to execute I just fire it up with nohup –

nohup python -u /home/oracle/stream.py>/home/oracle/stream.out 2>/home/oracle/stream.err &

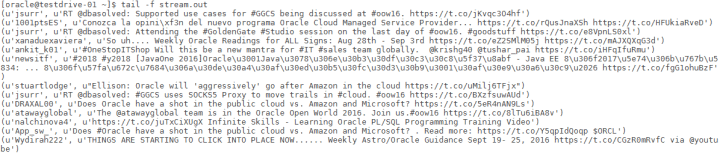

and if I tail my stream.out file with tail -f stream.out I can watch the tweets go by as they are inserted into the database

now that I am inserting the json document that the twitter api sends back to me into the database

I can use Oracle Database 12c support for json to expose the document in my table as a view using the following SQL

CREATE OR REPLACE FORCE EDITIONABLE VIEW "TWITTER_USER"."LIVE_TWITTER_FEED" ("CST_DATE", "UTC_DATE", "UTC_HOUR", "UTC_MINUTE", "ID", "CREATED_ON", "SCREEN_NAME", "LOCATION", "FOLLOWERS_CNT", "FRIENDS_CNT", "LISTED_CNT", "FAVOURITES_CNT", "STATUSES_CNT", "RETWEET_CNT", "FAVOURITE_CNT", "URL", "PROFILE_IMAGE_URL", "BANNER_IMAGE_URL", "HASHTAGS", "TWEET", "EMT_TIMEWHYUPUNISHME") AS

SELECT cast(TO_TIMESTAMP_TZ(REPLACE(upper(b.created_on),'+0000','-00:00'),'DY MON DD HH24:MI:SS TZH:TZM YYYY') at Time zone 'CST' as date) CST_DATE,

cast(TO_TIMESTAMP_TZ(REPLACE(upper(b.created_on),'+0000','-00:00'),'DY MON DD HH24:MI:SS TZH:TZM YYYY') as date) UTC_DATE,

cast(to_char(cast(TO_TIMESTAMP_TZ(REPLACE(upper(b.created_on),'+0000','-00:00'),'DY MON DD HH24:MI:SS TZH:TZM YYYY') as date),'HH24') as number) UTC_HOUR,

cast(to_char(cast(TO_TIMESTAMP_TZ(REPLACE(upper(b.created_on),'+0000','-00:00'),'DY MON DD HH24:MI:SS TZH:TZM YYYY') as date),'MI') as number) UTC_MINUTE,

a."ID",b."CREATED_ON",a."USERNAME",b."LOCATION",b."FOLLOWERS_CNT",b."FRIENDS_CNT",b."LISTED_CNT",b."FAVOURITES_CNT",b."STATUSES_CNT",

b."RETWEET_CNT",b."FAVOURITE_CNT",b."URL",b."PROFILE_IMAGE_URL",b."BANNER_IMAGE_URL",b."HASHTAGS",b."TWEET",

a.TIMEWHYUPUNISHME

FROM EAT_MY_TWEET a,

json_table(tweet_json,

'$' columns(

id varchar(50) path '$.id',

created_on varchar2(100) path '$.created_at',

screen_name varchar2(200) path '$."user".screen_name',

location varchar2(250) path '$."user"."location"',

followers_cnt number path '$."user".followers_count',

friends_cnt number path '$."user".friends_count',

listed_cnt number path '$."user".listed_count',

favourites_cnt number path '$."user".favourites_count',

statuses_cnt number path '$."user".statuses_count',

retweet_cnt number path '$.retweet_count',

favourite_cnt number path '$.favorite_count',

url varchar2(250) path '$."user"."url"',

profile_image_url varchar2(500) path '$."user".profile_image_url',

banner_image_url varchar2(500) path '$."user".profile_banner_url',

hashtags varchar2(500) format json with wrapper path '$.entities.hashtags[*].text',

tweet varchar2(250) path '$.text'

-- nested path '$.entities.hashtags[*]' columns (ind_hashtag varchar2(30) path '$.text' )

)

) b

Then I can analyze the real time twitter data I’m interested in using a simple sql statement

SELECT * FROM LIVE_TWITTER_FEED ;

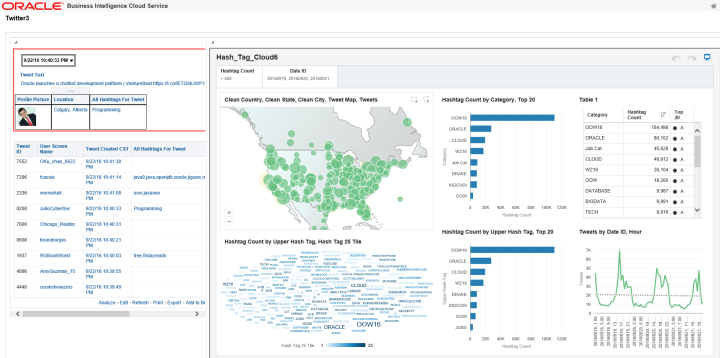

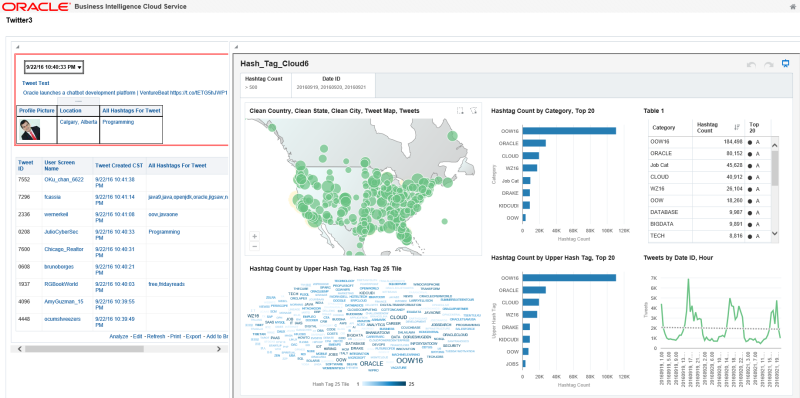

and while being able to hit real time twitter data with SQL is cool the real goal is being able to surface that data inside of our BI tools. Here we combine Answers and Visual Analyzer on a dashboard to show latest tweets and aggregate information over time utilizing Oracle Business Intelligence Cloud Service (BICS)